Yea, the “cheaper than droids” line in Andor feels strangely prescient ATM.

A little bit of neuroscience and a little bit of computing

Not a stock market person or anything at all … but NVIDIA’s stock has been oscillating since July and has been falling for about 2 weeks (see Yahoo Finance).

What are the chances that this is the investors getting cold feet about the AI hype? There were open reports from some major banks/investors about a month or so ago raising questions about the business models (right?). I’ve seen a business/analysis report on AI that, despite trying to trumpet it, actually contained data on growing uncertainty about its capabilities from those actually trying to implement, deploy and use it.

I’d wager that the situation right now is full of tension, with plenty of conflicting opinions from different groups of people, almost none of whom actually know much about generative AI/LLMs, and all of whom have different and competing stakes and interests.

Yea I know, which is why I said it may become a harsh battle. I’m not in education, but it really seems like a difficult situation. My broader point about the harsh battle was that if it becomes well known that LLMs are bad for a child’s development, then there’ll be a good amount of anxiety from parents etc.

Yea, this highlights a fundamental tension I think: sometimes, perhaps oftentimes, the point of doing something is the doing itself, not the result.

Tech is hyper-focused on removing the “doing” and reproducing the result. Now that it’s trying to put itself into the “thinking” part of human work, this tension is becoming unavoidable.

I think we can all take it as a given that we don’t want to hand total control to machines, simply because of accountability issues. Which means we want a human “in the loop” to ensure things stay sensible. But the ability of that human to keep things sensible requires skills, experience and insight. And all of the focus our education system now has on grades and certificates has led us astray into thinking that practice and experience don’t mean that much. In a way, the labour market and employers are relevant here in their insistence on experience (to the point of absurdity sometimes).

Bottom line is that we humans are doing machines, and we learn through practice and experience, in ways that I suspect are much closer to building intuitions. Being stuck on a problem, being confused and getting things wrong are all part of this experience. Making it easier to get the right answer is not making education better. LLMs likely have no good role to play in education, and I wouldn’t be surprised if an outright ban, in what may become a harshly fought battle, isn’t too far away.

All that being said, I also think LLMs raise questions about what it is we’re doing with our education and tests, and whether the simple response to their existence is to conclude that anything an LLM can easily do well isn’t worth assessing. Of course, as I’ve said above, that’s likely rubbish … building up an intelligent and capable human likely requires getting them to do things an LLM could easily do. But I think the question still stands as to whether we also need to find a way to focus more on the less mechanical parts of human intelligence and education.

Sure, but IME it is very far from doing the things that good, well-written and informed human content could do, especially once we’re talking about forums and the like, where you can have good conversations with informed people about your problem.

IMO, whatever LLMs are doing that older systems can’t isn’t greater than what was lost to SEO- and ads-driven slop and shitty search.

Moreover, the business interest of LLM companies is clearly in dominating and controlling (as that’s just capitalism and the “smart” thing to do), which means the older human-driven system of information sharing and problem solving is vulnerable to being severely threatened and destroyed … while we could just as well enjoy some hybridised system. But because profit is the focus, and the means of making profit are problematic, we’re in rough waters, and I don’t think the profit-driven players can be trusted to create a net positive (they haven’t been trustworthy for decades now).

I really think it’s mostly about getting a big enough data set to effectively train an LLM.

I mean, yes of course. But I don’t think there’s any way in which it is just about that. Because the business model around having and providing services around LLMs is to supplant the data that’s been trained on and the services that created that data. What other business model could there be?

In the case of Google’s AI alongside its search engine, and even ChatGPT itself, this is clearly one of the use cases that has emerged and is actually working relatively well: replacing the internet search engine and giving users “answers” directly.

Users like it because it feels more comfortable, natural and useful, and probably quicker too. And in some cases it is actually better. But it’s important to appreciate how we got here … by the internet becoming shittier, by search engines becoming shittier, all in the pursuit of ad revenue and the corresponding tolerance of SEO slop.

IMO, to ignore the “carnivorous” dynamics here, which I think clearly go beyond ordinary capitalism and innovation, is to miss the forest for the trees. Somewhat sadly, this tech era (approx. MS Windows 95 to now) has taught people that the latest new thing must be a good idea and we should all get on board before it’s too late.

I mean, their goal and service is to get you to the actual web page someone else made.

What made Google so desirable when it started was that it did an excellent job of getting you to the desired web page and off of google as quickly as possible. The prevailing model at the time was to keep users on the page for as long as possible by creating big messy “everything portals”.

Once Google dropped, with a simple search field and high-quality results, it took off. Of course, now they’re more like their original competitors than their original successful self … but that’s a lesson for us about what capitalistic success actually ends up being about.

The whole AI business model of completely replacing the internet by eating it up for free is the complete Sith lord version of the old portal idea. Whatever you think about copyright, the bottom line is that the deeper phenomenon isn’t just about “stealing” content, it’s about eating it to feed a bigger creature that no one else can defeat.

So … can we like finally dismiss Google Chrome as the obviously awful idea it is and which should never have made it this far and remind all of the web devs married to it that they’re doing bad things and are the reason why we can’t have nice things?

Hmmm … a web browser owned by a monopolistic advertising company … how could that possibly go wrong!!!

We wouldn’t need AI in the first place if we could just find the information we need.

I’ve commented on this before … but it almost seems like that is the point, or an opportunistic moment for Google … turn the internet to shit so that we “need” the AI and that Google have a new business to grow into. Capitalism at its finest.

Also there’s a fair bit of effort going in to making local LLMs.

Yea it’s definitely interesting but my gut feeling is that open source or local LLMs (like llama) are false hope against the broader dynamics. Surely with greater Google-level resources comes ‘better’ and more convenient AI. I’d bet that open/local LLMs will end up like Linux Desktop: meaninglessly small technical user base with no anti-monopoly effects at all. Which, to open the issue up to “capitalism!!”, raises the general issue of how individualistic rather than organisational actions can be ineffectual.

The insidious part missing here is that AI search destroys the internet. You no longer search for other people’s pages or content … you simply search for “the answer”.

Sure there might be links and footnotes, but the whole product is to reconstitute the internet into something Google (or whoever) own and control from top to bottom. That is the death of the internet and some of the values which built it in the first place.

Ideally for Google, we all become “information or content” serfs to their AI “freehold”. Every “conversation” we have with the AI or otherwise is more training data. Every post or article or report or paper is just data for the AI, which we provide as a service so that we can suckle at the great “AI search”.

And let’s not fool ourselves into thinking that there isn’t real and convincing convenience in something like this. It makes sense, so long as AI can be useful enough to justify the ease of it.

Which is why the real issue isn’t whether AI is “good enough” or “not actually intelligent” … that’s a distraction. The issue is what the economic implications are.

AI is hard to train and to keep up to date and it’s hard to improve on … these are resource intensive tasks. Which means there’s centralisation built right in.

AI consumes and stores data in a destructive way. It destroys or undermines the utility of that data … as you can just use the AI instead … and it is also likely lossy (thus hallucinations etc).

So … centralised, data-eating technology. If we were talking about liberties or property rights or IP or creativity or the economy … an all-eating centralising pattern would be thunderously fearsome. Monarchism, imperialism or colonialism … monopolisation … complete serfdom. It’s the same type of thing … but “just” for information technology … which is maybe not that significant … except, how much are we all using the internet for everything, and how much are our livelihoods linked to it in some way?

While there’s a bit of a focus here on what it’d “mean” for Apple to do this … more broadly, which streaming services don’t have ads by now? Prime and Netflix do, I know that much.

Basically it seems the industry has done exactly what cable did IIRC … once they’ve got you by the balls they’ll squeeze you as dry as they can.

Apart from finding a local hardcopy rental shop (I have and am trying to use it as much as possible), or you know, just not watching, “reverting” back to piracy is likely the only way to react here.

Very happy to see it come to wikipedia!!

But I think it also needs some polish. The contrast is too high and the blue on black of the hyperlinks is too garish for sure.

One area that I am somewhat knowledgeable about is image/video upscaling

Oh I believe you. I’ve seen it done on a home machine on old time-lapse photos. It might have been janky for individual photos, but as frames in a movie it easily elevated the footage.

Yea this. It’s a weird time though. All of it is hype and marketing, hoping to cover costs by searching for some unseen product down the line … even the original ChatGPT feels like a basic marketing stunt: “If people can chat with it they’ll think it’s miraculous, however useful it actually is”.

OTOH, it’s easy to forget that genuine progress has happened with this rush of AI, and it surprised many. Literally the year before AlphaGo beat the world champion, no one thought it was going to happen any time soon. And though I haven’t checked in lately, from what I could tell, the progress on protein folding made by DeepMind was real (however hyped it also was). Whether new things are still coming or not I don’t know, but it seems more than possible. But of course, that doesn’t mean there isn’t a big pile of hype that will blow away in the wind.

What I ultimately find disappointing is the way the mainstream has responded to all of this.

It’s our generation’s cigarettes.

“I don’t know, everyone was just doing it” is what we’ll say and what prior generations have said about smoking everywhere all of the time.

The stimulation from and addiction to nicotine or social dopamine … it’s the same shit. The weird marketing, branding and business capture that big tech has now could look just like the marketing and wealth of cigarettes in the past.

Kinda funny: not too long ago, if you were paying attention to the tech industry, it was a fun mental exercise to try to think of the ways in which Google or MS could fall.

Now, AFAICT, neither is falling any time soon, but there certainly seems to be a shift in how they’re perceived and how their brands sit in the market (though even so, I’m still probably in a bubble on this).

But I’m not sure how predictable it would have been that both would look silly stumbling for AI dominance.

And, yea, I’m chalking Recall up to the AI race, as it seems like a grab for training data to me, and IIRC there were some clues around that this could be true.

So I’ve been ranting lately (as have others) about how big tech is moving on from the open user-driven internet and aiming to build its own new thing as an AI interface to all the hoovered data (rather than conventional search engines) …

which makes this (and the underlying Bing going down) feel rather eerie.

How far away (in time or probability) is a complete collapse of the big search-engines … as in they just aren’t there any more?

LOL (I haven’t actually met someone like that, in part because I’m not a USian and generally not subject to that sort of type ATM … but I am morbidly curious TBH).

Great take.

Older/less tech literate people will stay on the big, AI-dominated platforms getting their brains melted by increasingly compelling, individually-tailored AI propaganda

Ooof … great way of putting it … “brain melting AI propaganda” … I can almost see a sci-fi short film premised on this image … with the main scene being when a normal-ish person tries to have a conversation with a brain-melted person and we slowly see from their behaviour and language just how melted they’ve become.

Maybe we’ll see an increase in discord/matrix style chatroom type social media, since it’s easier to curate those and be relatively confident everyone in a particular server is human.

Yep. This is a pretty vital project in the social media space right now that, IMO, isn’t getting enough attention, in part, I suspect, because a lot of the current movements in alternative social media are driven by millennials and Gen Xers nostalgic for the internet of 2014 who don’t want to make something new. And so the idea of an AI-protected space doesn’t really register in their minds. The problems they’re solving are platform dominance, moderation and lock-in.

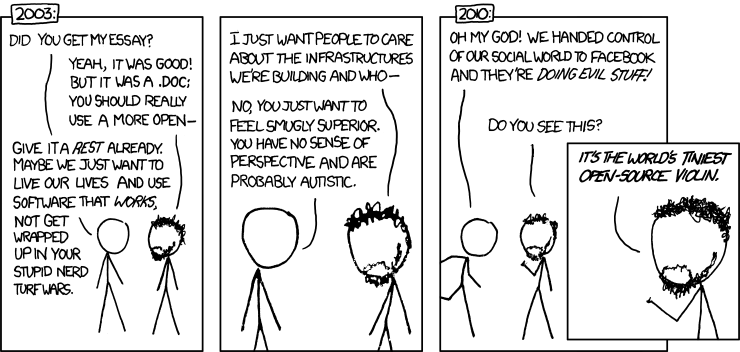

Worthwhile, but in all seriousness about 10 years too late and after the damage has been done (surely our society would be different if social media hadn’t gone down the path it did from 2010 onward). Now what’s likely at stake is the enshittification or en-slop-ification (slop = unwanted AI-generated garbage) of internet content and the obscuring of quality human-made content, especially content from niche interests. Algorithms started this, and alt-social is combating that, which is great.

But good community-building platforms with strong privacy or “enclosing” and AI/bot-protection mechanisms are needed now. Unfortunately, all of these clones of big-social platforms (Lemmy included) are not optimised for building and fostering communities. In fact, I’m not sure I see community hosting as a quality in any social media platform at the moment apart from Discord, which says a lot I think. Lemmy’s private and local-only communities (on the roadmap, apparently) are a start, but still only a modification of the Reddit model.

Thanks!